LACP Cisco switch. Chapter 10. Configure physical switches for OpenStack Networking

Implementing Cisco IP Switched Networks (SWITCH) Foundation Learning Guide: Campus Network Architecture

This chapter from Implementing Cisco IP Switched Networks (SWITCH) Foundation Learning Guide: (CCNP SWITCH 300-115) covers implementing VLANs and trunks in campus switched architecture, understanding the concept of VTP along with its limitations and configuration, and implementing and configuring EtherChannel.


This chapter covers the following topics:

- Implementing VLANs and trunks in campus switched architecture

- Understanding the concept of VTP along with its limitations and configuration

- Implementing and configuring EtherChannel

This chapter covers the key concepts of VLANs, trunking, and EtherChannel needed to build campus switched networks. Knowing the function of VLANs and trunks and how to configure them is the core knowledge needed for building a campus switched network. VLANs can span the whole network, or they can be configured to remain local. VLANs also play a critical role in the deployment of voice and wireless networks. Even though you might not be a specialist in either of those two fields, it is important to understand the basics because both voice and wireless often rely on a basic switched network.

Once VLANs are created, their names and descriptions are stored in a VLAN database, with the exception of specific VLANs such as VLANs in the extended range in Cisco IOS for the Catalyst 6500. A mechanism called VLAN Trunking Protocol (VTP) dynamically distributes this information between switches. However, even if network administrators do not plan to enable VTP, it is important to consider its consequences.

EtherChannel can be used to bundle physical links into one virtual link, thus increasing throughput. There are multiple ways traffic can be distributed over the physical links within the EtherChannel.

Implementing VLANs and Trunks in Campus Environment

Within the switched internetwork, VLANs provide segmentation and organizational flexibility. VLANs let administrators group end nodes or workstations logically by function, project team, or application, without regard to the physical location of the users. In addition, VLANs allow you to apply access and security policies to particular groups of users and to limit the broadcast domain.

In addition, the voice VLAN feature enables access ports to carry IP voice traffic from an IP phone. Because the sound quality of an IP phone call can deteriorate if the data is unevenly sent, the switch supports quality of service (QoS).

This section discusses in detail how to plan, implement, and verify VLAN technologies and address schemes to meet the given business and technical requirements along with constraints. This ability includes being able to meet these objectives:

- Describe the different VLAN segmentation models

- Identify the basic differences between end-to-end and local VLANs

- Describe the benefits and drawbacks of local VLANs versus end-to-end VLANs

- Configure and verify VLANs

- Implement a trunk in a campus network

- Configure and verify trunks

- Explain switchport mode interactions

- Describe voice VLANs

- Configure voice VLANs

VLAN Overview

A VLAN is a logical broadcast domain that can span multiple physical LAN segments. Within the switched internetwork, VLANs provide segmentation and organizational flexibility. A VLAN can exist on a single switch or span multiple switches. VLANs can include stations (hosts or end nodes) in a single building or in multiple-building infrastructures. As shown in Figure 3-1, sales, human resources, and engineering are three different VLANs spread across all three floors.

The Cisco Catalyst switch implements VLANs by only forwarding traffic to destination ports that are in the same VLAN as the originating ports. Each VLAN on the switches implements address learning, forwarding, and filtering decisions and loop-avoidance mechanisms, just as though the VLAN were a separate physical switch.

Ports in the same VLAN share broadcasts. Ports in different VLANs do not share broadcasts, as illustrated in Figure 3-2, where PC 3 and PC 4 cannot ping each other because they are in different VLANs, whereas PC 1 and PC 2 can ping each other because they are part of the same VLAN. Containing broadcasts within a VLAN improves the overall performance of the network. Because a VLAN is a single broadcast domain, campus design best practices generally recommend mapping a VLAN to one IP subnet. To communicate between VLANs, packets need to pass through a router or Layer 3 device.

Inter-VLAN routing is discussed in detail in Chapter 5, “Inter-VLAN Routing.” Generally, a port carries traffic for only a single VLAN. For a VLAN to span multiple switches, Catalyst switches use trunks. A trunk carries traffic for multiple VLANs by using Inter-Switch Link (ISL) encapsulation or IEEE 802.1Q. This chapter discusses trunking in more detail in later sections. Because VLANs are an important aspect of any campus design, almost all Cisco devices support VLANs and trunking.

Most Cisco products support only 802.1Q trunking because 802.1Q is the industry standard. This book focuses only on 802.1Q.

VLAN Segmentation

Larger flat networks generally consist of many end devices in which broadcasts and unknown unicast packets are flooded on all ports in the network. One advantage of using VLANs is the capability to segment the Layer 2 broadcast domain. All devices in a VLAN are members of the same broadcast domain. If an end device transmits a Layer 2 broadcast, all other members of the VLAN receive the broadcast. Switches filter the broadcast from all the ports or devices that are not part of the same VLAN.
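The filtering behavior described above can be sketched as a small model. This is an illustrative simplification, not how a switch is implemented: the port names and VLAN numbers are hypothetical, and only access ports on a single switch are considered.

```python
def flood_targets(port_vlans, ingress_port):
    """Return the ports that receive a broadcast arriving on ingress_port.

    A broadcast is flooded only to other ports in the same VLAN as the
    ingress port; ports in any other VLAN are filtered out.
    """
    vlan = port_vlans[ingress_port]
    return sorted(
        port
        for port, port_vlan in port_vlans.items()
        if port_vlan == vlan and port != ingress_port
    )


# Hypothetical access-port-to-VLAN assignment on one switch.
port_vlans = {
    "Gi1/0/1": 10,
    "Gi1/0/2": 10,
    "Gi1/0/3": 20,
    "Gi1/0/4": 20,
    "Gi1/0/5": 10,
}

print(flood_targets(port_vlans, "Gi1/0/1"))  # ['Gi1/0/2', 'Gi1/0/5']
print(flood_targets(port_vlans, "Gi1/0/3"))  # ['Gi1/0/4']
```

The broadcast from a VLAN 10 port never reaches the VLAN 20 ports, which is the segmentation of the Layer 2 broadcast domain in miniature.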

In a campus design, a network administrator can design a campus network with one of two models: end-to-end VLANs or local VLANs. Business and technical requirements, past experience, and political motivations can influence the design chosen. Choosing the right model initially can help create a solid foundation upon which to grow the business. Each model has its own advantages and disadvantages. When configuring a switch for an existing network, try to determine which model is used so that you can understand the logic behind each switch configuration and position in the infrastructure.

End-to-End VLANs

The term end-to-end VLAN refers to a single VLAN that is associated with switch ports widely dispersed throughout an enterprise network on multiple switches. A Layer 2 switched campus network carries traffic for this VLAN throughout the network, as shown in Figure 3-3, where VLANs 1, 2, and 3 are spread across all three switches.

If more than one VLAN in a network is operating in the end-to-end mode, special links (Layer 2 trunks) are required between switches to carry the traffic of all the different VLANs.

An end-to-end VLAN model has the following characteristics:

- Each VLAN is dispersed geographically throughout the network.

- Users are grouped into each VLAN regardless of the physical location.

- As a user moves throughout a campus, the VLAN membership of that user remains the same, regardless of the physical switch to which this user attaches.

- Users are typically associated with a given VLAN for network management reasons. This is why they are kept in the same VLAN, therefore the same group, as they move through the campus.

- All devices on a given VLAN typically have addresses on the same IP subnet.

- Switches commonly operate in a server/client VTP mode.

Local VLANs

The campus enterprise architecture is based on the local VLAN model. In a local VLAN model, all users of a set of geographically common switches are grouped into a single VLAN, regardless of the organizational function of those users. Local VLANs are generally confined to a wiring closet, as shown in Figure 3-4. In other words, these VLANs are local to a single access switch and connect via a trunk to an upstream distribution switch. If users move from one location to another in the campus, their connection changes to the new VLAN at the new physical location.

In the local VLAN model, Layer 2 switching is implemented at the access level, and routing is implemented at the distribution and core level, as shown in Figure 3-4, to enable users to maintain access to the resources they need. An alternative design is to extend routing to the access layer, so that the links between the access switches and distribution switches are routed links.

The following are some local VLAN characteristics and user guidelines:

- The network administrator should create local VLANs with physical boundaries in mind rather than the job functions of the users on the end devices.

- Generally, local VLANs exist between the access and distribution levels.

- Traffic from a local VLAN is routed at the distribution and core levels to reach destinations on other networks.

- Configure the VTP mode in transparent mode because VLANs on a given access switch should not be advertised to all other switches in the network, nor do they need to be manually created in any other switch VLAN databases. VTP is discussed in more detail later in this chapter.

- A network that consists entirely of local VLANs can benefit from the faster convergence offered by routing protocols, instead of relying on spanning tree for Layer 2 networks. It is usually recommended to have one to three VLANs per access layer switch.

Comparison of End-to-End VLANs and Local VLANs

This subsection describes the benefits and drawbacks of local VLANs versus end-to-end VLANs.

Because a VLAN usually represents a Layer 3 segment, each end-to-end VLAN enables a single Layer 3 segment to be dispersed geographically throughout the network. The following could be some of the reasons for implementing the end-to-end design:

- Grouping users: Users can be grouped on a common IP segment, even though they are geographically dispersed. Recently, the trend has been moving toward virtualization. Solutions such as those from VMware need end-to-end VLANs to be spread across segments of the campus.

- Security: A VLAN can contain resources that should not be accessible to all users on the network, or there might be a reason to confine certain traffic to a particular VLAN.

- Applying quality of service (QoS): Traffic from a given VLAN can be given higher or lower access priority to network resources. Note that QoS may also be applied without the use of VLANs.

- Routing avoidance: If much of the VLAN user traffic is destined for devices on that same VLAN, and routing to those devices is not desirable, users can access resources on their VLAN without their traffic being routed off the VLAN, even though the traffic might traverse multiple switches.

- Special-purpose VLAN: Sometimes a VLAN is provisioned to carry a single type of traffic that must be dispersed throughout the campus (for example, multicast, voice, or visitor VLANs).

- Poor design: For no clear purpose, users are placed in VLANs that span the campus or even span WANs. Sometimes when a network is already configured and running, organizations are hesitant to improve the design because of downtime or other political reasons.

The following list details some points that network administrators should consider when implementing end-to-end VLANs:

- Switch ports are provisioned for each user and associated with a given VLAN. Because users on an end-to-end VLAN can be anywhere in the network, all switches must be aware of that VLAN. This means that all switches carrying traffic for end-to-end VLANs are required to have those specific VLANs defined in each switch’s VLAN database.

- Also, flooded traffic for the VLAN is, by default, passed to every switch even if it does not currently have any active ports in the particular end-to-end VLAN.

- Finally, troubleshooting devices on a campus with end-to-end VLANs can be challenging because the traffic for a single VLAN can traverse multiple switches in a large area of the campus, and that can easily cause potential spanning-tree problems.

Based on the data presented in this section, there are many reasons to implement end-to-end VLANs. The main reason to implement local VLANs is simplicity. Local VLAN configurations are quick and easy for small-scale networks.

Mapping VLANs to a Hierarchical Network

In the past, network designers have attempted to implement the 80/20 rule when designing networks. The rule was based on the observation that, in general, 80 percent of the traffic on a network segment was passed between local devices, and only 20 percent of the traffic was destined for remote network segments. Therefore, network architecture used to prefer end-to-end VLANs. To avoid the complications of end-to-end VLANs, designers now consolidate servers in central locations on the network and provide access to external resources, such as the Internet, through one or two paths on the network because the bulk of traffic now traverses a number of segments. Therefore, the paradigm now is closer to a 20/80 proportion, in which the greater flow of traffic leaves the local segment; so, local VLANs have become more efficient.

In addition, the concept of end-to-end VLANs was attractive when IP address configuration was a manually administered and burdensome process; therefore, anything that reduced this burden as users moved between networks was an improvement. However, given the ubiquity of Dynamic Host Configuration Protocol (DHCP), the process of configuring an IP address at each desktop is no longer a significant issue. As a result, there are few benefits to extending a VLAN throughout an enterprise; clustering and similar requirements are among the remaining cases.

Local VLANs are part of the enterprise campus architecture design, as shown in Figure 3-4, in which VLANs used at the access layer should extend no further than their associated distribution switch. For example, VLANs 1, 10 and VLANs 2, 20 are each confined to a local access switch. Traffic is then routed out of the local VLAN to the distribution layer and then to the core, depending on the destination. It is usually recommended to have two to three VLANs per access block rather than span all the VLANs across all access blocks. This design can mitigate Layer 2 troubleshooting issues that occur when a single VLAN traverses the switches throughout a campus network. In addition, because Spanning Tree Protocol (STP) is configured for redundancy, this design limits STP to only the access and distribution switches, which helps to reduce the network complexity in times of failure.

Implementing the enterprise campus architecture design using local VLANs provides the following benefits:

- Deterministic traffic flow: The simple layout provides a predictable Layer 2 and Layer 3 traffic path. If a failure occurs that was not mitigated by the redundancy features, the simplicity of the model facilitates expedient problem isolation and resolution within the switch block.

- Active redundant paths: When implementing Per-VLAN Spanning Tree (PVST) or Multiple Spanning Tree (MST), because there is no loop, all links can be used, which makes use of the redundant paths.

- High availability: Redundant paths exist at all infrastructure levels. Local VLAN traffic on access switches can be passed to the building distribution switches across an alternative Layer 2 path if a primary path failure occurs. Router redundancy protocols can provide failover if the default gateway for the access VLAN fails. When both the STP instance and VLAN are confined to a specific access and distribution block, Layer 2 and Layer 3 redundancy measures and protocols can be configured to fail over in a coordinated manner.

- Finite failure domain: If VLANs are local to a switch block, and the number of devices on each VLAN is kept small, failures at Layer 2 are confined to a small subset of users.

- Scalable design: Following the enterprise campus architecture design, new access switches can be easily incorporated, and new submodules can be added when necessary.

Implementing a Trunk in a Campus Environment

A trunk is a point-to-point link that carries the traffic for multiple VLANs across a single physical link between two switches or any two devices. Trunking is used to extend Layer 2 operations across an entire network, such as end-to-end VLANs, as shown in Figure 3-5. PC 1 in VLAN 1 can communicate with the host in VLAN 1 on another switch over the single trunk link, just as a host in VLAN 20 can communicate with a host in VLAN 20 on another switch.

As discussed earlier in this chapter, to allow a switch port that connects two switches to carry more than one VLAN, it must be configured as a trunk. If frames from a single VLAN traverse a trunk link, a trunking protocol must mark the frame to identify its associated VLAN as the frame is placed onto the trunk link. The receiving switch then knows the frame’s VLAN origin and can process the frame accordingly. On the receiving switch, the VLAN ID (VID) is removed when the frame is forwarded on to an access link associated with its VLAN.

A special protocol is used to carry multiple VLANs over a single link between two devices. There are two trunking technologies:

- Inter-Switch Link (ISL): A Cisco proprietary trunking encapsulation

- IEEE 802.1Q: An industry-standard trunking method

ISL is a Cisco proprietary implementation. It is not widely used anymore.

When configuring an 802.1Q trunk, a matching native VLAN must be defined on each end of the trunk link. A trunk link is inherently associated with tagging each frame with a VID. The purpose of the native VLAN is to enable frames that are not tagged with a VID to traverse the trunk link. Native VLAN is discussed in more detail in a later part of this section.

Because the ISL protocol is almost obsolete, this book focuses only on 802.1Q. Figure 3-6 depicts how ISL encapsulates the normal Ethernet frame. Currently, all Catalyst switches support 802.1Q tagging for multiplexing traffic from multiple VLANs onto a single physical link.

IEEE 802.1Q trunk links employ the tagging mechanism to carry frames for multiple VLANs, in which each frame is tagged to identify the VLAN to which the frame belongs. Figure 3-7 shows the layout of the 802.1Q frame.

The IEEE 802.1Q/802.1p standard provides the following inherent architectural advantages over ISL:

- 802.1Q has smaller frame overhead than ISL. As a result, 802.1Q is more efficient than ISL, especially in the case of small frames. 802.1Q overhead is 4 bytes, whereas ISL is 30 bytes.

- 802.1Q is a widely supported industry standard protocol.

- 802.1Q has the support for 802.1p fields for QoS.

The 802.1Q Ethernet frame header contains the following fields:

- Dest: Destination MAC address (6 bytes)

- Src: Source MAC address (6 bytes)

- Tag: Inserted 802.1Q tag (4 bytes, detailed here)

- EtherType (TPID): Set to 0x8100 to specify that the 802.1Q tag follows.

- PRI: 3-bit 802.1p priority field.

- CFI: Canonical Format Identifier is always set to 0 for Ethernet switches and to 1 for Token Ring-type networks.

- VLAN ID: 12-bit VLAN field. Of the 4096 possible VLAN IDs, the maximum number of possible VLAN configurations is 4094. A VLAN ID of 0 indicates priority frames, and value 4095 (FFF) is reserved. CFI, PRI, and VLAN ID are represented as Tag Control Information (TCI) fields.
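The tag layout listed above can be made concrete with a minimal sketch that packs and unpacks the 4-byte tag. The function names `build_tag` and `parse_tag` are illustrative, not part of any library; the field widths and the 0x8100 TPID are taken from the list above.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value announcing that an 802.1Q tag follows


def build_tag(vlan_id, priority=0, cfi=0):
    """Pack TPID plus TCI (3-bit PRI, 1-bit CFI, 12-bit VLAN ID) into 4 bytes."""
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits")
    if cfi not in (0, 1):
        raise ValueError("CFI is a single bit")
    tci = (priority << 13) | (cfi << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def parse_tag(tag):
    """Split a 4-byte 802.1Q tag back into its TCI fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"priority": tci >> 13, "cfi": (tci >> 12) & 1, "vlan_id": tci & 0x0FFF}


tag = build_tag(vlan_id=110, priority=5)
print(tag.hex())       # 8100a06e
print(parse_tag(tag))  # {'priority': 5, 'cfi': 0, 'vlan_id': 110}
```

The sketch accepts the full 12-bit range so that priority-tagged frames (VLAN ID 0) can be built; a caller that assigns VLANs would restrict values to 1 through 4094.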

IEEE 802.1Q uses an internal tagging mechanism that modifies the original frame (as shown by the X over FCS in the original frame in Figure 3-7), recalculates the cyclic redundancy check (CRC) value for the entire frame with the tag, and inserts the new CRC value in a new FCS. ISL, in comparison, wraps the original frame and adds a second FCS that is built only on the header information but does not modify the original frame FCS.

IEEE 802.1p redefined the three most significant bits in the 802.1Q tag to allow for prioritization of the Layer 2 frame.

If a non-802.1Q-enabled device or an access port receives an 802.1Q frame, the tag data is ignored, and the packet is switched at Layer 2 as a standard Ethernet frame. This allows for the placement of Layer 2 intermediate devices, such as unmanaged switches or bridges, along the 802.1Q trunk path. To process an 802.1Q tagged frame, a device must enable a maximum transmission unit (MTU) of 1522 or higher.

Baby giants are frames that are larger than the standard MTU of 1500 bytes but less than 2000 bytes. Because ISL and 802.1Q tagged frames increase the MTU beyond 1500 bytes, switches consider both frames as baby giants. ISL-encapsulated packets over Ethernet have an MTU of 1548 bytes, whereas 802.1Q has an MTU of 1522 bytes.
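The 1522-byte and 1548-byte figures follow from simple arithmetic. The sketch below assumes the usual Ethernet accounting of a 14-byte header and a 4-byte FCS around a 1500-byte payload, and uses the 4-byte and 30-byte overheads quoted earlier for 802.1Q and ISL.

```python
ETH_HEADER = 14      # destination MAC + source MAC + EtherType
ETH_FCS = 4          # frame check sequence
STANDARD_MTU = 1500  # maximum payload of a standard Ethernet frame
DOT1Q_OVERHEAD = 4   # inserted 802.1Q tag
ISL_OVERHEAD = 30    # ISL header plus its additional FCS


def max_frame_size(payload=STANDARD_MTU, encapsulation_overhead=0):
    """Largest frame on the wire for a given payload and trunk encapsulation."""
    return ETH_HEADER + payload + ETH_FCS + encapsulation_overhead


print(max_frame_size())                                       # 1518
print(max_frame_size(encapsulation_overhead=DOT1Q_OVERHEAD))  # 1522
print(max_frame_size(encapsulation_overhead=ISL_OVERHEAD))    # 1548
```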

Understanding Native VLAN in 802.1Q Trunking

The IEEE 802.1Q protocol allows operation between equipment from different vendors. All frames, except those in the native VLAN, are equipped with a tag when traversing the link, as shown in Figure 3-8.

A frequent configuration error is to have different native VLANs on the two ends of a trunk. The native VLAN that is configured on each end of an 802.1Q trunk must be the same. If one end is configured for native VLAN 1 and the other for native VLAN 2, a frame that is sent in VLAN 1 on one side will be received on VLAN 2 on the other. In effect, VLAN 1 and VLAN 2 are merged across that link even though they were meant to be separate segments. There is no reason this should be required, and connectivity issues will occur in the network. If there is a native VLAN mismatch on either side of an 802.1Q link, Layer 2 loops may occur because VLAN 1 STP BPDUs are sent to the IEEE STP MAC address (0180.c200.0000) untagged.
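The effect of a mismatch can be illustrated with a deliberately simplified model: frames in the sender's native VLAN cross the trunk untagged, and the receiver places any untagged frame in its own native VLAN. The function name is hypothetical, and real switches add platform-specific behavior that this sketch ignores.

```python
def receive_vlan(frame_vlan, sender_native, receiver_native):
    """VLAN in which the far-end switch places a frame sent over an 802.1Q trunk."""
    if frame_vlan == sender_native:
        # Sent untagged, so the receiver falls back to its own native VLAN.
        return receiver_native
    # Sent with a tag, so the VLAN ID travels with the frame.
    return frame_vlan


# Matching native VLANs: traffic stays in its VLAN.
print(receive_vlan(1, sender_native=1, receiver_native=1))    # 1

# Mismatch (native VLAN 1 on one end, 2 on the other): VLAN 1 leaks into VLAN 2.
print(receive_vlan(1, sender_native=1, receiver_native=2))    # 2

# Tagged VLANs are unaffected by the mismatch.
print(receive_vlan(110, sender_native=1, receiver_native=2))  # 110
```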

Cisco switches use Cisco Discovery Protocol (CDP) to warn of a native VLAN mismatch. On select versions of Cisco IOS Software, CDP may not be transmitted or will be automatically turned off if VLAN 1 is disabled on the trunk.

By default, the native VLAN will be VLAN 1. For the purpose of security, the native VLAN on a trunk should be set to a specific VID that is not used for normal operations elsewhere on the network.

Switch(config-if)# switchport trunk native vlan vlan-ID

Cisco ISL does not have a concept of native VLAN. Traffic for all VLANs is tagged by encapsulating each frame.

Understanding DTP

All recent Cisco Catalyst switches, except for the Catalyst 2900XL and 3500XL, use a Cisco proprietary point-to-point protocol called Dynamic Trunking Protocol (DTP) on trunk ports to negotiate the trunking state. DTP negotiates the operational mode of directly connected switch ports to a trunk port and selects an appropriate trunking protocol. Negotiating trunking is a recommended practice in multilayer switched networks during initial configuration because it avoids network issues resulting from trunking misconfigurations. Once the network is stable, the best practice is to change to a permanent trunk.

Cisco Trunking Modes and Methods

Table 3-1 describes the different trunking modes supported by Cisco switches.

Table 3-1 Trunking Modes

| Mode in Cisco IOS | Function |
| --- | --- |
| access | Puts the interface into permanent nontrunking mode and negotiates to convert the link into a nontrunk link. The interface becomes a nontrunk interface even if the neighboring interface does not agree to the change. |
| trunk | Puts the interface into permanent trunking mode and negotiates to convert the link into a trunk link. The interface becomes a trunk interface even if the neighboring interface does not agree to the change. |
| nonegotiate | Prevents the interface from generating DTP frames. You must configure the local and neighboring interface manually as a trunk interface to establish a trunk link. Use this mode when connecting to a device that does not support DTP. |
| dynamic desirable | Makes the interface actively attempt to convert the link to a trunk link. The interface becomes a trunk interface if the neighboring interface is set to trunk, desirable, or auto mode. |
| dynamic auto | Makes the interface willing to convert the link to a trunk link. The interface becomes a trunk interface if the neighboring interface is set to trunk or desirable mode. This is the default mode for all Ethernet interfaces in Cisco IOS. |

The Cisco Catalyst 4000 and 4500 switches run Cisco IOS or Cisco CatOS depending on the Supervisor Engine model. The Supervisor Engines for the Catalyst 4000 and 4500 do not support ISL encapsulation on a per-port basis. Refer to the product documentation on Cisco.com for more details.

Figure 3-9 shows the combinations of DTP modes on the two ends of a link. Depending on the combination, the port becomes either an access port or a trunk port.
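The combinations can also be derived directly from the mode descriptions in Table 3-1. The sketch below is a rough model under stated assumptions: the labels `access`, `trunk`, `dynamic desirable`, and `dynamic auto` are used as mode names, the nonegotiate case is left out, and a link whose two ends settle in different states is reported as a mismatch.

```python
# For each local mode, the neighbor modes that make the local port a trunk,
# following the descriptions in Table 3-1.
TRUNK_IF_NEIGHBOR = {
    "access": set(),  # permanent nontrunking
    "trunk": {"access", "trunk", "dynamic desirable", "dynamic auto"},  # permanent trunking
    "dynamic desirable": {"trunk", "dynamic desirable", "dynamic auto"},
    "dynamic auto": {"trunk", "dynamic desirable"},
}


def local_state(local_mode, neighbor_mode):
    """Operational state of one port given the mode on the other end."""
    return "trunk" if neighbor_mode in TRUNK_IF_NEIGHBOR[local_mode] else "access"


def link_result(mode_a, mode_b):
    """State of the link, or 'mismatch' when the two ends disagree."""
    state_a = local_state(mode_a, mode_b)
    state_b = local_state(mode_b, mode_a)
    return state_a if state_a == state_b else "mismatch"


modes = list(TRUNK_IF_NEIGHBOR)
for a in modes:
    for b in modes:
        print(f"{a:18} + {b:18} -> {link_result(a, b)}")
```

Two ports left at the default dynamic auto mode never form a trunk in this model, because neither end actively initiates the negotiation.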

Figure 3-9 Output from the SIMPLE Program

VLAN Ranges and Mappings

ISL supports VLAN numbers in the range of 1 to 1005, whereas 802.1Q VLAN numbers are in the range of 1 to 4094. The default behavior of VLAN trunks is to permit all normal and extended-range VLANs across the link if it is an 802.1Q interface and to permit normal VLANs in the case of an ISL interface.
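A short sketch of these ranges follows. It assumes the usual Cisco split between normal-range VLANs (1 to 1005) and extended-range VLANs (1006 to 4094); the function names and the `dot1q`/`isl` labels are chosen for illustration.

```python
def classify_vlan(vlan_id):
    """Label a VLAN ID as normal range (1-1005) or extended range (1006-4094)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    return "normal" if vlan_id <= 1005 else "extended"


def carried_by_default(vlan_id, encapsulation):
    """Whether a trunk of the given encapsulation can carry the VLAN."""
    if encapsulation == "dot1q":
        return 1 <= vlan_id <= 4094
    if encapsulation == "isl":
        return 1 <= vlan_id <= 1005
    raise ValueError("encapsulation must be 'dot1q' or 'isl'")


print(classify_vlan(110))                  # normal
print(classify_vlan(2000))                 # extended
print(carried_by_default(2000, "dot1q"))   # True
print(carried_by_default(2000, "isl"))     # False
```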

VLAN Ranges

Cisco Catalyst switches support up to 4096 VLANs depending on the platform and software version. Table 3-2 illustrates the VLAN division for Cisco Catalyst switches. Table 3-3 shows VLAN ranges.

Table 3-2 VLAN Support Matrix for Catalyst Switches

Type of Switch

Maximum Number of VLANs

VLAN ID Range

Chapter 10. Configure physical switches for OpenStack Networking

This chapter documents the common physical switch configuration steps required for OpenStack Networking. Vendor-specific configuration is included for the following switches:

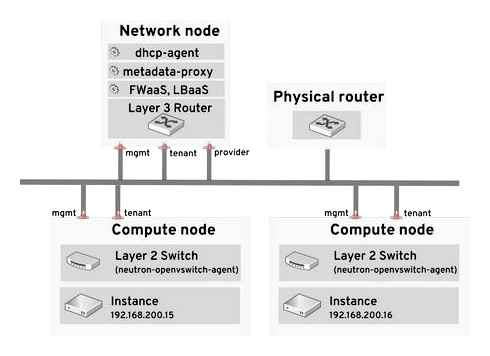

10.1. Planning your physical network environment

The physical network adapters in your OpenStack nodes can be expected to carry different types of network traffic, such as instance traffic, storage data, or authentication requests. The type of traffic these NICs will carry affects how their ports are configured on the physical switch.

As a first step, you will need to decide which physical NICs on your Compute node will carry which types of traffic. Then, when the NIC is cabled to a physical switch port, that switch port will need to be specially configured to allow trunked or general traffic.

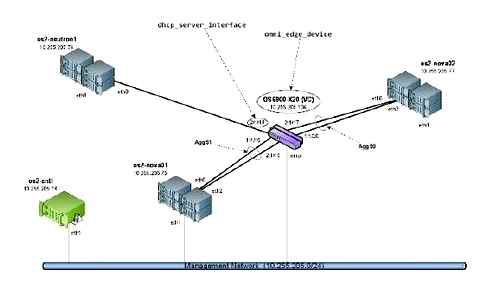

For example, this diagram depicts a Compute node with two NICs, eth0 and eth1. Each NIC is cabled to a Gigabit Ethernet port on a physical switch, with eth0 carrying instance traffic, and eth1 providing connectivity for OpenStack services:

Sample network layout

This diagram does not include any additional redundant NICs required for fault tolerance.

10.2. Configure a Cisco Catalyst switch

10.2.1. Configure trunk ports

OpenStack Networking allows instances to connect to the VLANs that already exist on your physical network. The term trunk is used to describe a port that allows multiple VLANs to traverse through the same port. As a result, VLANs can span across multiple switches, including virtual switches. For example, traffic tagged as VLAN110 in the physical network can arrive at the Compute node, where the 8021q module will direct the tagged traffic to the appropriate VLAN on the vSwitch.

10.2.1.1. Configure trunk ports for a Cisco Catalyst switch

If using a Cisco Catalyst switch running Cisco IOS, you might use the following configuration syntax to allow traffic for VLANs 110 and 111 to pass through to your instances. This configuration assumes that your physical node has an Ethernet cable connected to interface GigabitEthernet1/0/12 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

interface GigabitEthernet1/0/12
 description Trunk to Compute Node
 spanning-tree portfast trunk
 switchport trunk encapsulation dot1q
 switchport mode trunk
 switchport trunk native vlan 2
 switchport trunk allowed vlan 2,110,111

These settings are described below:

interface GigabitEthernet1/0/12

The switch port that the node’s NIC is plugged into. This is just an example, so it is important to first verify that you are configuring the correct port here. You can use the show interface command to view a list of ports.

description Trunk to Compute Node

The description that appears when listing all interfaces using the show interface command. It should be descriptive enough to let someone understand which system is plugged into this port, and what the connection’s intended function is.

spanning-tree portfast trunk

Assuming your environment uses STP, this tells PortFast that this port is used to trunk traffic.

switchport trunk encapsulation dot1q

Enables the 802.1Q trunking standard (rather than ISL). This will vary depending on what your switch supports.

switchport mode trunk

Configures this port as a trunk port, rather than an access port, meaning that it will allow VLAN traffic to pass through to the virtual switches.

switchport trunk native vlan 2

Setting a native VLAN tells the switch where to send untagged (non-VLAN) traffic.

switchport trunk allowed vlan 2,110,111

Defines which VLANs are allowed through the trunk.
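Because the interface, native VLAN, and allowed VLANs differ from site to site, it can help to generate the stanza from parameters instead of editing a pasted copy. The helper below is a hypothetical sketch: `render_trunk_port` is not part of any Cisco or OpenStack tool, and it only reproduces the commands described above as text.

```python
def render_trunk_port(interface, description, native_vlan, allowed_vlans):
    """Render the trunk port stanza described above as configuration text."""
    vlans = sorted(set(allowed_vlans))
    for vlan in [native_vlan, *vlans]:
        if not 1 <= vlan <= 4094:
            raise ValueError(f"invalid VLAN ID: {vlan}")
    vlan_list = ",".join(str(vlan) for vlan in vlans)
    return "\n".join(
        [
            f"interface {interface}",
            f" description {description}",
            " spanning-tree portfast trunk",
            " switchport trunk encapsulation dot1q",
            " switchport mode trunk",
            f" switchport trunk native vlan {native_vlan}",
            f" switchport trunk allowed vlan {vlan_list}",
        ]
    )


# Example values from this section; adjust them for your own environment.
config = render_trunk_port(
    interface="GigabitEthernet1/0/12",
    description="Trunk to Compute Node",
    native_vlan=2,
    allowed_vlans=[2, 110, 111],
)
print(config)
```

The output is plain text to review before applying it on the switch; nothing in the sketch connects to a device.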

10.2.2. Configure access ports

Not all NICs on your Compute node will carry instance traffic, so some do not need to be configured to allow multiple VLANs to pass through. These ports require only one VLAN to be configured, and might fulfill other operational requirements, such as transporting management traffic or Block Storage data. These ports are commonly known as access ports and usually require a simpler configuration than trunk ports.

10.2.2.1. Configure access ports for a Cisco Catalyst switch

Using the example from the Sample network layout diagram, GigabitEthernet1/0/13 (on a Cisco Catalyst switch) is configured as an access port for eth1. This configuration assumes that your physical node has an Ethernet cable connected to interface GigabitEthernet1/0/13 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

interface GigabitEthernet1/0/13
 description Access port for Compute Node
 switchport mode access
 switchport access vlan 200
 spanning-tree portfast

These settings are described below:

interface GigabitEthernet1/0/13

The switch port that the node’s NIC is plugged into. The interface value is just an example, so it is important to first verify that you are configuring the correct port here. You can use the show interface command to view a list of ports.

description Access port for Compute Node

The description that appears when listing all interfaces using the show interface command. It should be descriptive enough to let someone understand which system is plugged into this port, and what the connection’s intended function is.

switchport mode access

Configures this port as an access port, rather than a trunk port.

switchport access vlan 200

Configures the port to allow traffic on VLAN 200. Your Compute node should also be configured with an IP address from this VLAN.

spanning-tree portfast

If using STP, this tells STP not to attempt to initialize this as a trunk, allowing for quicker port handshakes during initial connections (such as server reboot).

10.2.3. Configure LACP port aggregation

LACP allows you to bundle multiple physical NICs together to form a single logical channel. Also known as 802.3ad (or bonding mode 4 in Linux), LACP creates a dynamic bond for load-balancing and fault tolerance. LACP must be configured at both physical ends: on the physical NICs, and on the physical switch ports.

10.2.3.1. Configure LACP on the physical NIC

Edit the /home/stack/network-environment.yaml file:

- type: linux_bond
  name: bond1
  mtu: 9000
  bonding_options:
  members:
    - type: interface
      name: nic3
      mtu: 9000
      primary: true
    - type: interface
      name: nic4
      mtu: 9000

Configure the Open vSwitch bridge to use LACP:

BondInterfaceOvsOptions: mode=802.3ad

For information on configuring network bonds, see the Advanced Overcloud Customization guide.

10.2.3.2. Configure LACP on a Cisco Catalyst switch

In this example, the Compute node has two NICs using VLAN 100:

Physically connect the Compute node’s two NICs to the switch (for example, ports 12 and 13).

Create the LACP port channel:

interface port-channel1
 switchport access vlan 100
 switchport mode access
 spanning-tree guard root

Configure switch ports 12 (Gi1/0/12) and 13 (Gi1/0/13):

sw01# config t
Enter configuration commands, one per line.  End with CNTL/Z.
sw01(config)# interface GigabitEthernet1/0/12
 switchport access vlan 100
 switchport mode access
 speed 1000
 duplex full
 channel-group 1 mode active
 channel-protocol lacp
interface GigabitEthernet1/0/13
 switchport access vlan 100
 switchport mode access
 speed 1000
 duplex full
 channel-group 1 mode active
 channel-protocol lacp

Review your new port channel. The resulting output lists the new port-channel Po1, with member ports Gi1/0/12 and Gi1/0/13:

sw01# show etherchannel summary

Number of channel-groups in use: 1
Number of aggregators:           1

Group  Port-channel  Protocol    Ports
------+-------------+-----------+-----------------------------
1      Po1(SD)       LACP        Gi1/0/12(D)  Gi1/0/13(D)

Remember to apply your changes by copying the running-config to the startup-config: copy running-config startup-config.

10.2.4. Configure MTU settings

Certain types of network traffic might require that you adjust your MTU size. For example, jumbo frames (9000 bytes) might be suggested for certain NFS or iSCSI traffic.

MTU settings must be changed from end-to-end (on all hops that the traffic is expected to pass through), including any virtual switches. For information on changing the MTU in your OpenStack environment, see Chapter 11, Configure MTU Settings.
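The end-to-end requirement follows from the fact that the smallest MTU along a path limits the frame size that can cross it. The Python sketch below is only an illustration of that reasoning: the hop names and values are hypothetical assumptions, not taken from a real deployment.

```python
def effective_path_mtu(hop_mtus):
    """Return the largest MTU that fits through every hop on the path."""
    if not hop_mtus:
        raise ValueError("at least one hop is required")
    return min(hop_mtus.values())


def hops_below(hop_mtus, required_mtu):
    """List the hops whose MTU still needs to be raised."""
    return sorted(name for name, mtu in hop_mtus.items() if mtu < required_mtu)


# Hypothetical path: every name and value here is an illustrative assumption.
path = {
    "compute-nic": 9000,
    "ovs-bridge": 9000,
    "physical-switch": 1500,
    "storage-nic": 9000,
}

print(effective_path_mtu(path))
print(hops_below(path, 9000))
```

A single hop left at its default is enough to limit the whole path, which is why virtual switches are included in the list of devices to check.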

10.2.4.1. Configure MTU settings on a Cisco Catalyst switch

This example enables jumbo frames on your Cisco Catalyst 3750 switch.

Review the current MTU settings:

sw01# show system mtu

System MTU size is 1600 bytes
System Jumbo MTU size is 1600 bytes
System Alternate MTU size is 1600 bytes
Routing MTU size is 1600 bytes

MTU settings are changed switch-wide on 3750 switches, and not for individual interfaces. This command configures the switch to use jumbo frames of 9000 bytes. If your switch supports it, you might prefer to configure the MTU settings for individual interfaces.

sw01# config t
Enter configuration commands, one per line.  End with CNTL/Z.
sw01(config)# system mtu jumbo 9000
Changes to the system jumbo MTU will not take effect until the next reload is done

Remember to save your changes by copying the running-config to the startup-config: copy running-config startup-config.

When possible, reload the switch to apply the change. This will result in a network outage for any devices that are dependent on the switch.

sw01# reload
Proceed with reload? [confirm]

When the switch has completed its reload operation, confirm the new jumbo MTU size. The exact output may differ depending on your switch model, where System MTU might apply to non-Gigabit interfaces, and Jumbo MTU might describe all Gigabit interfaces.

sw01# show system mtu

System MTU size is 1600 bytes
System Jumbo MTU size is 9000 bytes
System Alternate MTU size is 1600 bytes
Routing MTU size is 1600 bytes

10.2.5. Configure LLDP discovery

The ironic-python-agent service listens for LLDP packets from connected switches. The collected information can include the switch name, port details, and available VLANs. Similar to Cisco Discovery Protocol (CDP), LLDP assists with the discovery of physical hardware during director’s introspection process.

10.2.5.1. Configure LLDP on a Cisco Catalyst switch

Use lldp run to enable LLDP globally on your Cisco Catalyst switch:

sw01# config t
Enter configuration commands, one per line.  End with CNTL/Z.
sw01(config)# lldp run

View any neighboring LLDP-compatible devices:

sw01# show lldp neighbor
Capability codes:
    (R) Router, (B) Bridge, (T) Telephone, (C) DOCSIS Cable Device
    (W) WLAN Access Point, (P) Repeater, (S) Station, (O) Other

Device ID           Local Intf     Hold-time  Capability      Port ID
DEP42037061562G3    Gi1/0/11       180        B,T             422037061562G3:P1

Total entries displayed: 1

Remember to save your changes by copying the running-config to the startup-config: copy running-config startup-config.

10.3. Configure a Cisco Nexus switch

10.3.1. Configure trunk ports

OpenStack Networking allows instances to connect to the VLANs that already exist on your physical network. The term trunk is used to describe a port that allows multiple VLANs to traverse through the same port. As a result, VLANs can span across multiple switches, including virtual switches. For example, traffic tagged as VLAN110 in the physical network can arrive at the Compute node, where the 8021q module will direct the tagged traffic to the appropriate VLAN on the vSwitch.

10.3.1.1. Configure trunk ports for a Cisco Nexus switch

If using a Cisco Nexus switch, you might use the following configuration syntax to allow traffic for VLANs 110 and 111 to pass through to your instances. This configuration assumes that your physical node has an ethernet cable connected to interface Ethernet1/12 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

interface Ethernet1/12
  description Trunk to Compute Node
  switchport mode trunk
  switchport trunk allowed vlan 2,110,111
  switchport trunk native vlan 2
end
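To reason about which frames a trunk like this one carries, the allowed VLAN list and the native VLAN can be modelled in a few lines of Python. This is a simplified sketch, not switch behavior in full: the function names are hypothetical, and the model assumes only that untagged frames are placed in the native VLAN and that a frame passes if its VLAN is in the allowed list.

```python
def parse_vlan_list(text):
    """Expand a list such as '2,110-112' into a set of VLAN IDs."""
    vlans = set()
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            first, last = (int(x) for x in part.split("-", 1))
            vlans.update(range(first, last + 1))
        else:
            vlans.add(int(part))
    return vlans


def trunk_accepts(allowed, native_vlan, frame_vlan):
    """frame_vlan is the 802.1Q tag, or None for an untagged frame."""
    vlan = native_vlan if frame_vlan is None else frame_vlan
    return vlan in parse_vlan_list(allowed)


# Values from the example trunk: allowed vlan 2,110,111 and native vlan 2.
print(trunk_accepts("2,110,111", 2, 110))   # tagged VLAN 110
print(trunk_accepts("2,110,111", 2, 200))   # tagged VLAN 200
print(trunk_accepts("2,110,111", 2, None))  # untagged frame
```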

10.3.2. Configure access ports

Not all NICs on your Compute node will carry instance traffic, and so do not need to be configured to allow multiple VLANs to pass through. These ports require only one VLAN to be configured, and might fulfill other operational requirements, such as transporting management traffic or Block Storage data. These ports are commonly known as access ports and usually require a simpler configuration than trunk ports.

10.3.2.1. Configure access ports for a Cisco Nexus switch

Using the example from the Sample network layout diagram, Ethernet1/13 (on a Cisco Nexus switch) is configured as an access port for eth1. This configuration assumes that your physical node has an ethernet cable connected to interface Ethernet1/13 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

interface Ethernet1/13
  description Access port for Compute Node
  switchport mode access
  switchport access vlan 200

10.3.3. Configure LACP port aggregation

LACP allows you to bundle multiple physical NICs together to form a single logical channel. Also known as 802.3ad (or bonding mode 4 in Linux), LACP creates a dynamic bond for load-balancing and fault tolerance. LACP must be configured at both physical ends: on the physical NICs, and on the physical switch ports.

10.3.3.1. Configure LACP on the physical NIC

Edit the /home/stack/network-environment.yaml file:

- type: linux_bond
  name: bond1
  mtu: 9000
  bonding_options:
  members:
    - type: interface
      name: nic3
      mtu: 9000
      primary: true
    - type: interface
      name: nic4
      mtu: 9000

Configure the Open vSwitch bridge to use LACP:

BondInterfaceOvsOptions: mode=802.3ad

For information on configuring network bonds, see the Advanced Overcloud Customization guide.

10.3.3.2. Configure LACP on a Cisco Nexus switch

In this example, the Compute node has two NICs using VLAN 100:

Physically connect the Compute node’s two NICs to the switch (for example, ports 12 and 13).

Confirm that LACP is enabled:

(config)# show feature | include lacp
lacp                  1         enabled

Configure ports 1/12 and 1/13 as access ports, and as members of a channel group. Depending on your deployment, you might choose to use trunk interfaces rather than access interfaces. For example, for Cisco UCS the NICs are virtual interfaces, so you might prefer to set up all access ports. In addition, there will likely be VLAN tagging configured on the interfaces.

interface Ethernet1/12
  description Access port for Compute Node
  switchport mode access
  switchport access vlan 100
  channel-group 10 mode active

interface Ethernet1/13
  description Access port for Compute Node
  switchport mode access
  switchport access vlan 100
  channel-group 10 mode active

10.3.4. Configure MTU settings

Certain types of network traffic might require that you adjust your MTU size. For example, jumbo frames (9000 bytes) might be suggested for certain NFS or iSCSI traffic.

MTU settings must be changed from end-to-end (on all hops that the traffic is expected to pass through), including any virtual switches. For information on changing the MTU in your OpenStack environment, see Chapter 11, Configure MTU Settings.

10.3.4.1. Configure MTU settings on a Cisco Nexus 7000 switch

MTU settings can be applied to a single interface on 7000-series switches. These commands configure interface 1/12 for jumbo frames by setting its MTU to 9216 bytes, which accommodates 9000-byte frames:

interface ethernet 1/12
  mtu 9216
  exit

10.3.5. Configure LLDP discovery

The ironic-python-agent service listens for LLDP packets from connected switches. The collected information can include the switch name, port details, and available VLANs. Similar to Cisco Discovery Protocol (CDP), LLDP assists with the discovery of physical hardware during director’s introspection process.

10.3.5.1. Configure LLDP on a Cisco Nexus 7000 switch

LLDP can be enabled for individual interfaces on Cisco Nexus 7000-series switches:

interface ethernet 1/12
  lldp transmit
  lldp receive
  no lacp suspend-individual
  no lacp graceful-convergence

interface ethernet 1/13
  lldp transmit
  lldp receive
  no lacp suspend-individual
  no lacp graceful-convergence

Remember to save your changes by copying the running-config to the startup-config: copy running-config startup-config.

10.4. Configure a Cumulus Linux switch

10.4.1. Configure trunk ports

OpenStack Networking allows instances to connect to the VLANs that already exist on your physical network. The term trunk is used to describe a port that allows multiple VLANs to traverse through the same port. As a result, VLANs can span across multiple switches, including virtual switches. For example, traffic tagged as VLAN110 in the physical network can arrive at the Compute node, where the 8021q module will direct the tagged traffic to the appropriate VLAN on the vSwitch.

10.4.1.1. Configure trunk ports for a Cumulus Linux switch

If using a Cumulus Linux switch, you might use the following configuration syntax to allow traffic for VLANs 100 and 200 to pass through to your instances. This configuration assumes that your physical node has transceivers connected to switch ports swp1 and swp2 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

auto bridge
iface bridge
  bridge-vlan-aware yes
  bridge-ports glob swp1-2
  bridge-vids 100 200

10.4.2. Configure access ports

Not all NICs on your Compute node will carry instance traffic, and so do not need to be configured to allow multiple VLANs to pass through. These ports require only one VLAN to be configured, and might fulfill other operational requirements, such as transporting management traffic or Block Storage data. These ports are commonly known as access ports and usually require a simpler configuration than trunk ports.

10.4.2.1. Configuring access ports for a Cumulus Linux switch

Using the example from the Sample network layout diagram, swp1 (on a Cumulus Linux switch) is configured as an access port. This configuration assumes that your physical node has an ethernet cable connected to the interface on the physical switch. Cumulus Linux switches use eth for management interfaces and swp for access/trunk ports.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

auto bridge
iface bridge
  bridge-vlan-aware yes
  bridge-ports glob swp1-2
  bridge-vids 100 200

auto swp1
iface swp1
  bridge-access 100

auto swp2
iface swp2
  bridge-access 200

10.4.3. Configure LACP port aggregation

LACP allows you to bundle multiple physical NICs together to form a single logical channel. Also known as 802.3ad (or bonding mode 4 in Linux), LACP creates a dynamic bond for load-balancing and fault tolerance. LACP must be configured at both physical ends: on the physical NICs, and on the physical switch ports.

10.4.3.1. Configure LACP on the physical NIC

There is no need to configure the physical NIC in Cumulus Linux.

10.4.3.2. Configure LACP on a Cumulus Linux switch

To configure the bond, edit /etc/network/interfaces and add a stanza for bond0 :

auto bond0
iface bond0
   address 10.0.0.1/30
   bond-slaves swp1 swp2 swp3 swp4

Remember to apply your changes by reloading the updated configuration: sudo ifreload -a

10.4.4. Configure MTU settings

Certain types of network traffic might require that you adjust your MTU size. For example, jumbo frames (9000 bytes) might be suggested for certain NFS or iSCSI traffic.

MTU settings must be changed from end-to-end (on all hops that the traffic is expected to pass through), including any virtual switches. For information on changing the MTU in your OpenStack environment, see Chapter 11, Configure MTU Settings.

10.4.4.1. Configure MTU settings on a Cumulus Linux switch

This example enables jumbo frames on your Cumulus Linux switch.

auto swp1
iface swp1
  mtu 9000

Remember to apply your changes by reloading the updated configuration: sudo ifreload -a

10.4.5. Configure LLDP discovery

By default, the LLDP service, lldpd, runs as a daemon and starts when the switch boots.

To view all LLDP neighbors on all ports/interfaces:

cumulus@switch$ netshow lldp

Local Port  Speed  Mode          Remote Port  Remote Host  Summary
----------  -----  ------------  -----------  -----------  ----------------
eth0        10G    Mgmt          swp6         mgmt-sw      IP: 10.0.1.11/24
swp51       10G    Interface/L3  swp1         spine01      IP: 10.0.0.11/32
swp52       10G    Interface/L   swp1         spine02      IP: 10.0.0.11/32

10.5. Configure an Extreme Networks EXOS switch

10.5.1. Configure trunk ports

OpenStack Networking allows instances to connect to the VLANs that already exist on your physical network. The term trunk is used to describe a port that allows multiple VLANs to traverse through the same port. As a result, VLANs can span across multiple switches, including virtual switches. For example, traffic tagged as VLAN110 in the physical network can arrive at the Compute node, where the 8021q module will direct the tagged traffic to the appropriate VLAN on the vSwitch.

10.5.1.1. Configure trunk ports on an Extreme Networks EXOS switch

If using an X-670 series switch, you might refer to the following example to allow traffic for VLANs 110 and 111 to pass through to your instances. This configuration assumes that your physical node has an ethernet cable connected to interface 24 on the physical switch. In this example, DATA and MNGT are the VLAN names.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

# create vlan DATA tag 110
# create vlan MNGT tag 111
# configure vlan DATA add ports 24 tagged
# configure vlan MNGT add ports 24 tagged

10.5.2. Configure access ports

Not all NICs on your Compute node will carry instance traffic, and so do not need to be configured to allow multiple VLANs to pass through. These ports require only one VLAN to be configured, and might fulfill other operational requirements, such as transporting management traffic or Block Storage data. These ports are commonly known as access ports and usually require a simpler configuration than trunk ports.

10.5.2.1. Configure access ports for an Extreme Networks EXOS switch

To continue the example from the diagram above, this example configures port 10 (on an Extreme Networks X-670 series switch) as an access port for eth1. You might use the following configuration to carry untagged traffic for a single VLAN (VLAN 110 in this example) on that port. This configuration assumes that your physical node has an ethernet cable connected to interface 10 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

create vlan VLANNAME tag NUMBER
configure vlan Default delete ports PORTSTRING
configure vlan VLANNAME add ports PORTSTRING untagged

# create vlan DATA tag 110
# configure vlan Default delete ports 10
# configure vlan DATA add ports 10 untagged

10.5.3. Configure LACP port aggregation

LACP allows you to bundle multiple physical NICs together to form a single logical channel. Also known as 802.3ad (or bonding mode 4 in Linux), LACP creates a dynamic bond for load-balancing and fault tolerance. LACP must be configured at both physical ends: on the physical NICs, and on the physical switch ports.

10.5.3.1. Configure LACP on the physical NIC

Edit the /home/stack/network-environment.yaml file:

- type: linux_bond
  name: bond1
  mtu: 9000
  bonding_options:
  members:
    - type: interface
      name: nic3
      mtu: 9000
      primary: true
    - type: interface
      name: nic4
      mtu: 9000

Configure the Open vSwitch bridge to use LACP:

BondInterfaceOvsOptions: mode=802.3ad

For information on configuring network bonds, see the Advanced Overcloud Customization guide.

10.5.3.2. Configure LACP on an Extreme Networks EXOS switch

In this example, the Compute node has two NICs using VLAN 100:

enable sharing MASTERPORT grouping ALL_LAG_PORTS lacp
configure vlan VLANNAME add ports PORTSTRING tagged

# enable sharing 11 grouping 11,12 lacp
# configure vlan DATA add port 11 untagged

10.5.4. Configure MTU settings

Certain types of network traffic might require that you adjust your MTU size. For example, jumbo frames (9000 bytes) might be suggested for certain NFS or iSCSI traffic.

MTU settings must be changed from end-to-end (on all hops that the traffic is expected to pass through), including any virtual switches. For information on changing the MTU in your OpenStack environment, see Chapter 11, Configure MTU Settings.

10.5.4.1. Configure MTU settings on an Extreme Networks EXOS switch

The example enables jumbo frames on any Extreme Networks EXOS switch, and supports forwarding IP packets with 9000 bytes:

enable jumbo-frame ports PORTSTRING
configure ip-mtu 9000 vlan VLANNAME

# enable jumbo-frame ports 11
# configure ip-mtu 9000 vlan DATA

10.5.5. Configure LLDP discovery

The ironic-python-agent service listens for LLDP packets from connected switches. The collected information can include the switch name, port details, and available VLANs. Similar to Cisco Discovery Protocol (CDP), LLDP assists with the discovery of physical hardware during director’s introspection process.

10.5.5.1. Configure LLDP settings on an Extreme Networks EXOS switch

The example allows configuring LLDP settings on any Extreme Networks EXOS switch. In this example, 11 represents the port string:

10.6. Configure a Juniper EX Series switch

10.6.1. Configure trunk ports

OpenStack Networking allows instances to connect to the VLANs that already exist on your physical network. The term trunk is used to describe a port that allows multiple VLANs to traverse through the same port. As a result, VLANs can span across multiple switches, including virtual switches. For example, traffic tagged as VLAN110 in the physical network can arrive at the Compute node, where the 8021q module will direct the tagged traffic to the appropriate VLAN on the vSwitch.

10.6.1.1. Configure trunk ports on the Juniper EX Series switch

If using a Juniper EX series switch running Juniper JunOS, you might use the following configuration to allow traffic for VLANs 110 and 111 to pass through to your instances. This configuration assumes that your physical node has an ethernet cable connected to interface ge-1/0/12 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

10.6.2. Configure access ports

Not all NICs on your Compute node will carry instance traffic, and so do not need to be configured to allow multiple VLANs to pass through. These ports require only one VLAN to be configured, and might fulfill other operational requirements, such as transporting management traffic or Block Storage data. These ports are commonly known as access ports and usually require a simpler configuration than trunk ports.

10.6.2.1. Configure access ports for a Juniper EX Series switch

To continue the example from the diagram above, this example configures ge-1/0/13 (on a Juniper EX series switch) as an access port for eth1. This configuration assumes that your physical node has an ethernet cable connected to interface ge-1/0/13 on the physical switch.

These values are examples only. Simply copying and pasting into your switch configuration without adjusting the values first will likely result in an unexpected outage.

10.6.3. Configure LACP port aggregation

LACP allows you to bundle multiple physical NICs together to form a single logical channel. Also known as 802.3ad (or bonding mode 4 in Linux), LACP creates a dynamic bond for load-balancing and fault tolerance. LACP must be configured at both physical ends: on the physical NICs, and on the physical switch ports.

10.6.3.1. Configure LACP on the physical NIC

Edit the /home/stack/network-environment.yaml file:

- type: linux_bond
  name: bond1
  mtu: 9000
  bonding_options:
  members:
    - type: interface
      name: nic3
      mtu: 9000
      primary: true
    - type: interface
      name: nic4
      mtu: 9000

Configure the Open vSwitch bridge to use LACP:

BondInterfaceOvsOptions: mode=802.3ad

For information on configuring network bonds, see the Advanced Overcloud Customization guide.

10.6.3.2. Configure LACP on a Juniper EX Series switch

In this example, the Compute node has two NICs using VLAN 100:

Physically connect the Compute node’s two NICs to the switch (for example, ports 12 and 13).

Create the port aggregate:

Configure switch ports 12 (ge-1/0/12) and 13 (ge-1/0/13) to join the port aggregate ae1 :

For Red Hat OpenStack Platform director deployments, in order to PXE boot from the bond, you need to set one of the bond members as lacp force-up. This will ensure that one bond member only comes up during introspection and first boot. The bond member set with lacp force-up should be the same bond member that has the MAC address in instackenv.json (the MAC address known to ironic must be the same MAC address configured with force-up ).

Enable LACP on port aggregate ae1 :

Add aggregate ae1 to VLAN 100:

Review your new port channel. The resulting output lists the new port aggregate ae1 with member ports ge-1/0/12 and ge-1/0/13 :

show lacp statistics interfaces ae1

Aggregated interface: ae1
LACP Statistics:   LACP Rx   LACP Tx   Unknown Rx   Illegal Rx
ge-1/0/12                0         0            0            0
ge-1/0/13                0         0            0            0

Remember to apply your changes by running the commit command.

10.6.4. Configure MTU settings

Certain types of network traffic might require that you adjust your MTU size. For example, jumbo frames (9000 bytes) might be suggested for certain NFS or iSCSI traffic.

MTU settings must be changed from end-to-end (on all hops that the traffic is expected to pass through), including any virtual switches. For information on changing the MTU in your OpenStack environment, see Chapter 11, Configure MTU Settings.

10.6.4.1. Configure MTU settings on a Juniper EX Series switch

This example enables jumbo frames on your Juniper EX4200 switch.

The MTU value is calculated differently depending on whether you are using Juniper or Cisco devices. For example, 9216 on Juniper is equivalent to 9202 on Cisco. The difference is the 14 bytes used for L2 headers: Cisco adds these automatically to the MTU value you specify, whereas on Juniper the usable MTU is 14 bytes smaller than the value you specify. So, to support an MTU of 9000 on the VLANs, you would configure an MTU of 9014 on Juniper.
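The arithmetic described above can be written out as a short Python sketch. It assumes only the 14-byte L2 header difference stated in this section; the function names are hypothetical.

```python
# 14 bytes of L2 header, as described in the text above.
L2_HEADER_BYTES = 14


def juniper_mtu_for(usable_mtu):
    """Value to configure on Juniper so that usable_mtu bytes remain usable."""
    return usable_mtu + L2_HEADER_BYTES


def usable_mtu_on_juniper(configured_mtu):
    """Usable MTU that results from a configured Juniper MTU."""
    return configured_mtu - L2_HEADER_BYTES


print(juniper_mtu_for(9000))        # 9014
print(usable_mtu_on_juniper(9216))  # 9202
```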

For Juniper EX series switches, MTU settings are set for individual interfaces. These commands configure jumbo frames on the ge-1/0/14 and ge-1/0/15 ports:

set interfaces ge-1/0/14 mtu 9216
set interfaces ge-1/0/15 mtu 9216

Remember to save your changes by running the commit command.

If using a LACP aggregate, you will need to set the MTU size there, and not on the member NICs. For example, this setting configures the MTU size for the ae1 aggregate:

set interfaces ae1 mtu 9216

10.6.5. Configure LLDP discovery

The ironic-python-agent service listens for LLDP packets from connected switches. The collected information can include the switch name, port details, and available VLANs. Similar to Cisco Discovery Protocol (CDP), LLDP assists with the discovery of physical hardware during director’s introspection process.

10.6.5.1. Configure LLDP on a Juniper EX Series switch

You can enable LLDP globally for all interfaces, or just for individual ones:

For example, to enable LLDP globally on your Juniper EX 4200 switch:

Or, enable LLDP just for the single interface ge-1/0/14 :

Remember to apply your changes by running the commit command.

Cisco EtherChannel Protocols and Configuration

In this Cisco CCNA tutorial, you’ll learn about the different EtherChannel Protocols. I’ll also discuss how to configure and verify them.

There are three available protocols:

- Link Aggregation Control Protocol (LACP)

- Port Aggregation Protocol (PAgP)

- Static EtherChannel

EtherChannel Protocols – LACP

LACP, or the Link Aggregation Control Protocol, is an open standard (IEEE 802.3ad), so it is supported on switches from all major vendors. With LACP, the switches on both sides negotiate the port channel creation and maintenance. Of the three available methods, this is the preferred one to use.

EtherChannel Protocols – PAgP

Next one we have is PAgP, which is Cisco’s Proprietary Port Aggregation Protocol. This works similarly to LACP where the switches on both sides negotiate the port channel creation and maintenance. It’s not recommended to use PAgP because it’s proprietary.

Finally, the third option we have is to configure a Static EtherChannel. With Static, the switches do not negotiate creation and maintenance, but the settings must still match on both sides for the port channel to come up.

With all three, you’ve got two switches that have got links going between them. You’re going to configure those into a port channel and you need to configure the same settings on both sides, on both switches.

We will use Static if LACP is not supported on both sides. LACP will be supported on all Cisco switches, but maybe you’re connecting to another vendor switch or server.

EtherChannel Parameters

The configuration for the protocols is really similar. They all actually use the same command, which is the ‘channel-group’ command. It’s the keyword that we use along with channel-group that decides which of the three protocols is going to be used.

The parameters for EtherChannel have to match on both sides of the link. The interfaces need to have a matching configuration. The settings that have to be the same on both sides include the speed and duplex, whether the port is set to Access or Trunk mode, the native VLAN, the allowed VLANs, and the Access VLAN if it’s an access port.
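As a rough illustration of that matching rule, the Python sketch below compares the settings listed above for the two ends of a link and reports any that differ. The PortSettings class, its field names, and the sample values are hypothetical; a real check would read these values from each switch.

```python
from dataclasses import asdict, dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PortSettings:
    """Hypothetical container for the settings that must match."""
    speed: int                            # Mbps
    duplex: str                           # "full" or "half"
    mode: str                             # "access" or "trunk"
    native_vlan: Optional[int] = None     # trunk ports only
    allowed_vlans: Tuple[int, ...] = ()   # trunk ports only
    access_vlan: Optional[int] = None     # access ports only


def mismatches(side_a, side_b):
    """Return the names of the settings that differ between the two sides."""
    a, b = asdict(side_a), asdict(side_b)
    return sorted(name for name in a if a[name] != b[name])


# Illustrative values only.
sw1 = PortSettings(1000, "full", "trunk", native_vlan=1, allowed_vlans=(10, 20))
sw2 = PortSettings(1000, "full", "trunk", native_vlan=99, allowed_vlans=(10, 20))

print(mismatches(sw1, sw2))
print(mismatches(sw1, sw1))
```

An empty list means the two sides agree on every listed setting; any name in the list is a candidate reason for the port channel failing to come up.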

LACP Configuration

LACP interfaces can be set as either Active or Passive. Let’s say we’re going to configure a port-channel between SW1 and SW2. If SW1’s interfaces are set as Active and SW2’s as Passive, the port channel will come up. But if both sides are set as Passive, the port channel will not come up, and if both sides are Active, the port channel will come up.

It’s recommended to configure both sides as Active. Your choices are, either both sides as Active or one side as Active and one side as Passive. It’s easiest just to configure both as Active because you don’t need to worry about which is the Active side and which is the Passive side.

It is configured at the interface level, therefore, specify the interfaces you want to group into the port channel. Here, we enter the commands:

channel-group 1 mode active

As soon as you enter the commands, it will create a new logical interface which is your port channel interface. Here, we’re configuring channel-group 1 so that would create port channel 1. The reason that there’s a number here is that you might have different port channels going to different neighbor switches.

Let’s say that we are SW1 and we’ve got a port channel going to SW2. We could make that port channel 1. If we’ve also got a port channel going to SW3, we could make that port channel 2.

After the port channel has been created, most of your interface settings are set at the port channel level. To do that, we enter ‘interface port-channel 1’ and configure settings such as the switchport mode there.

We would also set native VLAN, the allowed VLANs, etc., at this level. We need to configure those matching settings on the switch on the other side as well. We did it first on SW1, and then we do a matching configuration on SW2.

PAgP Configuration

The configuration for PAgP is the same, but rather than using Active or Passive, we use Desirable or Auto. The rules are similar to those for LACP: if one side is Desirable and the other is Auto, the port channel will come up. If both sides are Auto, it won’t, and if both sides are Desirable, it will.

Again, if you are going to use PAgP, which is not recommended, then set both sides as Desirable. In such a way, you don’t need to worry about which is Desirable and which is Auto.

Looking at the config below, it is exactly the same as it was for LACP. But here, we say:

channel-group 1 mode desirable

You can tell by the keyword at the end whether it’s going to be LACP, PAgP, or Static.

Static Configuration

For Static, again, it’s exactly the same configuration, but we say:

channel-group 1 mode on

The rest of the configuration is exactly the same for all of the protocols, except for the channel group mode.
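The mode keywords and negotiation rules from the three configuration sections can be summarized in a small Python sketch. It is a simplified model rather than a description of switch internals: the function names are hypothetical, and it assumes the Static keyword is ‘on’ and that a channel only forms when both sides use the same protocol.

```python
# Which protocol each channel-group mode keyword selects.
MODE_PROTOCOL = {
    "active": "LACP",
    "passive": "LACP",
    "desirable": "PAgP",
    "auto": "PAgP",
    "on": "Static",
}

# Modes that start negotiation; passive and auto only respond.
INITIATORS = {"active", "desirable"}


def protocol_for(mode):
    """Return the protocol selected by a channel-group mode keyword."""
    return MODE_PROTOCOL[mode.lower()]


def channel_forms(mode_a, mode_b):
    """Return True if the two sides would bring the port channel up."""
    a, b = mode_a.lower(), mode_b.lower()
    if protocol_for(a) != protocol_for(b):
        return False  # mixed protocols, or static against a negotiating side
    if protocol_for(a) == "Static":
        return True   # no negotiation; both sides are simply forced on
    return a in INITIATORS or b in INITIATORS


for pair in [("active", "passive"), ("passive", "passive"),
             ("desirable", "auto"), ("auto", "auto"),
             ("on", "on"), ("on", "active")]:
    print(pair, channel_forms(*pair))
```

The model also shows why setting both sides to Active (or both to Desirable) is the simplest choice: at least one initiator is always present.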

Verification – show etherchannel summary

For the verification, there’s one Swiss Army knife command for checking EtherChannel. That is:

show etherchannel summary

The Flags legend in the output tells you what all the letters actually mean. Looking down at the bottom, we can see that this is group 1 and our 1st port channel. The port channel interface is port channel 1, and in brackets, I see a capital letter S and U.

The capital letter S means that it’s a Layer 2 port channel. You can configure a Layer 3 port channel as well. That’s where on the interface, you would say ‘no switchport’ and put an IP address on there. Most commonly, it’s going to be a Layer 2 port channel.

The capital letter U means it’s in use, which basically means that it is up. I can see that the protocol is LACP, and over on the right, I can see that my ports are FastEthernet 0/23 and 0/24. A capital P means that they are in the port channel.

For a layer two port channel, if you see any letters other than exactly what you see here, there is a problem with the port channel and it’s not going to come up. If there is a problem with your port channel, by far, the most common issue is that your settings do not match on both sides.

So, look at the interface, both at the physical interface level and also at the port channel interface level. Make sure that the settings are exactly the same on both sides. Also, make sure that you’ve selected the correct interfaces that are cabled to each other as well.

You can also do a ‘ show etherchannel ’. That will give you more verbose output, but the summary really tells you everything that you need to know.
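The flag check described in this section can be sketched in Python. The example assumes a summary row of the form "group, port channel with flags, protocol, member ports with flags"; the function names are hypothetical, and the rule applied is the one given above for a Layer 2 port channel: SU on the port channel and P on every member port.

```python
import re

# Matches a token such as "Po1(SU)" or "Fa0/23(P)".
ENTRY = re.compile(r"^(?P<name>[^()]+)\((?P<flags>[A-Za-z]+)\)$")


def parse_entry(token):
    """Split 'Po1(SU)' into ('Po1', 'SU')."""
    match = ENTRY.match(token)
    if match is None:
        raise ValueError(f"unexpected token: {token!r}")
    return match.group("name"), match.group("flags")


def layer2_channel_is_healthy(row):
    """Check one data row of 'show etherchannel summary' output."""
    _group, channel, _protocol, *members = row.split()
    _, channel_flags = parse_entry(channel)
    member_flags = [parse_entry(member)[1] for member in members]
    return (channel_flags == "SU"
            and bool(member_flags)
            and all(flags == "P" for flags in member_flags))


print(layer2_channel_is_healthy("1 Po1(SU) LACP Fa0/23(P) Fa0/24(P)"))
print(layer2_channel_is_healthy("1 Po1(SD) LACP Gi1/0/12(D) Gi1/0/13(D)"))
```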

Verification – show spanning-tree vlan

The last command to look at is the ‘ show spanning-tree vlan ’. As we explained before, the reason for using EtherChannel is to avoid spanning-tree shutting down some of your links. So, after you’ve configured EtherChannel, you want to check that spanning-tree is working as you would like.

The example below is before we’ve configured EtherChannel. If you look at the picture over on the right, we’ve got a switch Acc3 with uplinks going to our switch CD1, the core distribution 1. CD1 is the root bridge for spanning-tree.

If we look at Acc3, it’s got 2 links going up to CD1. If we don’t put those into a port channel, then spanning-tree sees them as a potential loop and it’s going to block one of the links.

Looking at the output of our ‘show spanning-tree vlan 1’ command, down on the bottom I can see that Fa0/23 is forwarding. It’s the root port on Acc3, and Fa0/24 is blocking to prevent the loop. I’m only getting 100 Mbps of uplink bandwidth, rather than 200 Mbps with my 2 ports. To fix that, we configure EtherChannel.

After configuring EtherChannel, we can put the same command in again:

Before the port channel was configured, spanning-tree saw the physical interfaces Fa0/23 and Fa0/24 as separate links. When we put those in a port channel, spanning-tree just sees that one logical interface. It sees one link, which is not a potential loop as far as spanning-tree is concerned.

Now on the port channel, it is forwarding. I don’t have any ports that are blocking. I get the full bandwidth of both physical interfaces.

Cisco EtherChannel Protocols Configuration Example

This configuration example is taken from my free ‘Cisco CCNA Lab Guide’ which includes over 350 pages of lab exercises and full instructions to set up the lab for free on your laptop.

LACP EtherChannel Configuration

- The access layer switches Acc3 and Acc4 both have two FastEthernet uplinks. How much total bandwidth is available between the PCs attached to Acc3 and the PCs attached to Acc4?

Spanning tree shuts down all but one uplink on both switches so the total bandwidth available between them is a single FastEthernet link – 100 Mbps.

Convert the existing uplinks from Acc3 to CD1 and CD2 to LACP EtherChannel. Configure descriptions on the port channel interfaces to help avoid confusion later.

The uplinks go to two separate redundant switches at the core/distribution layer so we need to configure two EtherChannels, one to CD1 and one to CD2.

We’ll configure the Acc3 side of the EtherChannel to CD1 first. Don’t forget to set the native VLAN on the new port channel interface.

Acc3(config)#interface range f0/23 - 24

Acc3(config-if-range)#channel-group 1 mode active

Acc3(config)#interface port-channel 1

Acc3(config-if)#description Link to CD1

Acc3(config-if)#switchport mode trunk

Acc3(config-if)#switchport trunk native vlan 199

Then configure switch CD1 with matching settings.

CD1(config)#interface range f0/23 - 24

CD1(config-if-range)#channel-group 1 mode active

CD1(config)#interface port-channel 1

CD1(config-if)#description Link to Acc3

CD1(config-if)#switchport mode trunk

CD1(config-if)#switchport trunk native vlan 199

Next configure the Acc3 side of the EtherChannel to CD2. Remember to use a different port channel number.

Acc3(config)#interface range f0/21 - 22

Acc3(config-if-range)#channel-group 2 mode active

Acc3(config)#interface port-channel 2

Acc3(config-if)#description Link to CD2

Acc3(config-if)#switchport mode trunk

Acc3(config-if)#switchport trunk native vlan 199

Then configure switch CD2 with matching settings.

CD2(config)#interface range f0/21 - 22

CD2(config-if-range)#channel-group 2 mode active

CD2(config)#interface port-channel 2

CD2(config-if)#description Link to Acc3

CD2(config-if)#switchport mode trunk

CD2(config-if)#switchport trunk native vlan 199

Verify the EtherChannels come up.

The port channels should show flags (SU) (Layer 2, in use) with member ports (P) (in port-channel). Verify on both sides of the port channel.

PAgP EtherChannel Configuration

Convert the existing uplinks from Acc4 to CD1 and CD2 to PAgP EtherChannel. (Note that in a real world environment you should always use LACP if possible.)

It’s good practice to use the same port channel number on both sides of the link. CD1 is already using port channel 1 to Acc3, and CD2 is using port channel 2 to Acc3. From Acc4 to CD1 we’ll use port channel 2, and from Acc4 to CD2 we’ll use port channel 1.

We’ll configure the Acc4 side of the EtherChannel to CD2 first.

Acc4(config)#interface range f0/23 - 24

Acc4(config-if-range)#channel-group 1 mode desirable

Acc4(config)#interface port-channel 1

Acc4(config-if)#description Link to CD2

Acc4(config-if)#switchport mode trunk

Acc4(config-if)#switchport trunk native vlan 199

Then configure switch CD2 with matching settings.

CD2(config)#interface range f0/23 - 24

CD2(config-if-range)#channel-group 1 mode desirable

CD2(config)#interface port-channel 1

CD2(config-if)#description Link to Acc4

CD2(config-if)#switchport mode trunk

CD2(config-if)#switchport trunk native vlan 199

Next configure the Acc4 side of the EtherChannel to CD1. Remember to use a different port channel number.

Acc4(config)#interface range f0/21 - 22

Acc4(config-if-range)#channel-group 2 mode desirable

Acc4(config)#interface port-channel 2

Acc4(config-if)#description Link to CD1

Acc4(config-if)#switchport mode trunk

Acc4(config-if)#switchport trunk native vlan 199

Then configure switch CD1 with matching settings.

CD1(config)#interface range f0/21 - 22

CD1(config-if-range)#channel-group 2 mode desirable

CD1(config)#interface port-channel 2

CD1(config-if)#description Link to Acc4

CD1(config-if)#switchport mode trunk

CD1(config-if)#switchport trunk native vlan 199

Verify the EtherChannels come up.

The port channels should show flags (SU) (Layer 2, in use) with member ports (P) (in port-channel). Verify on both sides of the port channel.

On the core/distribution layer switches you should see both the LACP and PAgP port channels up.

Static EtherChannel Configuration

Convert the existing uplinks between CD1 and CD2 to static EtherChannel.

Port channels 1 and 2 are already in use so we’ll use port channel 3.

Configure the CD1 side first.

CD1(config)#interface range g0/1 - 2

CD1(config-if-range)#channel-group 3 mode on

CD1(config)#interface port-channel 3

CD1(config-if)#description Link to CD2

CD1(config-if)#switchport mode trunk

CD1(config-if)#switchport trunk native vlan 199

Then configure switch CD2 with matching settings.

CD2(config)#interface range g0/1 - 2

CD2(config-if-range)#channel-group 3 mode on

CD2(config)#interface port-channel 3

CD2(config-if)#description Link to CD1

CD2(config-if)#switchport mode trunk

CD2(config-if)#switchport trunk native vlan 199

Verify the EtherChannel comes up.

How much total bandwidth is available between the PCs attached to Acc3 and the PCs attached to Acc4 now?

The port channels from the Acc3 and Acc4 switches towards the root bridge CD1 are up and forwarding. Spanning tree shuts down the port channels toward CD2 to prevent a loop.

The port channels from Acc3 and Acc4 facing the root bridge comprise two FastEthernet interfaces, so the total bandwidth available between the PCs attached to the different access layer switches is 200 Mbps.


Text by Libby Teofilo, Technical Writer at www.flackbox.com


Etherchannels with LACP

To follow on from the Etherchannel/LAG article, have a look at how to configure etherchannel…

LACP is a protocol that can be used to negotiate the parameters of the channel. It is used to bring the channel up initially, and then to monitor the channel and add or remove links as required in response to faults and repairs. LACP does not affect how traffic is load balanced across the channel. LAGs were originally defined in IEEE standard 802.3ad, and later moved to 802.1AX. The standard defines link aggregation as a whole, including LACP, which sits in the data-link layer, above MAC addressing. Notice that this is a definition for Link Aggregation (LAGs), not etherchannel. Etherchannel, while very similar to LAGs, is technically a Cisco proprietary technology (after all, it includes support for PAgP). However, as mentioned previously, these are compatible technologies.

LACP is still used when configuring vPCs. This is because the connecting device still uses a normal LAG. For more information, see the Advanced vPC article.

Using LACP to Manage an Etherchannel

There are several advantages to using LACP to manage the channel. One advantage is that LACP is non-proprietary, so etherchannels/LAGs can be built between devices from different vendors. An example of this is building an etherchannel between a Cisco switch and a NetScaler appliance. A second advantage is that LACP will not allow the channel to form if there are problems between the two devices. This gives the administrator an opportunity to troubleshoot the issue before production traffic is added to the channel.

In some cases, there may be more links in the channel than the platform will allow to be active. For example, the switch may allow 8 active links, but there may be 16 links in the channel. LACP will negotiate between the two endpoints to decide which links will become active, and which will be standby. If an active link goes down, LACP will automatically cause a standby link to become active.

There is another unusual scenario where LACP is very useful. Imagine two switches are connected together, and a link fails. Normally, both ends will know that the link has failed, the link will go down, and the link-status light will go off. Now imagine that the two switches are connected via another device, such as a media converter or some type of network bridge. In this case, if the device between the switches fails, the switches may not be able to detect the link loss themselves. LACP, however, is able to detect this fault, dynamically remove the link from the group, and promote a standby link to active (if available).

The first party in an LACP conversation is called the Actor, and the second party is called the Partner. The actor and partner send LACP frames called LACPDUs to each other to share state information. No ‘commands’ are sent between the end devices, just the information in the LACPDUs, which the receiving LACP engine uses to make its own decisions. LACPDUs are sent to multicast MAC address 01-80-C2-00-00-02.

LACPDU keepalives are sent on a regular basis. If these LACPDUs stop being received, the LACP engine knows that the link is down, and can take appropriate action. In addition to the regular keepalives, LACPDUs can be sent as required to notify the other party about state changes (such as link up/down events) or when one endpoint does not know enough information about the other.

LACP enabled channels can operate in either active or passive mode. In active mode, the device will proactively start sending LACPDUs. In passive mode, the device will not send LACPDUs unless it receives LACPDUs from another device first. This means that if either or both devices are in active mode, a channel will be able to form. However, if both are in passive mode, the channel will be unable to form, as LACPDUs are not exchanged.
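The standard defines two keepalive rates: slow, with one LACPDU every 30 seconds, and fast, with one every second. A partner is timed out after three missed intervals. On platforms that support it, the rate requested from the partner can be changed per member interface. The sketch below assumes a switch and IOS version where the `lacp rate` interface command is available, which is not the case on every Catalyst model.

```
! Assumes the platform supports per-interface LACP rate configuration
Switch(config)#interface range f0/23 - 24
! Ask the partner to send LACPDUs every second instead of every 30 seconds
Switch(config-if-range)#lacp rate fast
Switch(config-if-range)#end
! Shows the partner's flags, including whether it is sending fast or slow
Switch#show lacp neighbor
```

A faster rate shortens the time needed to notice a silent failure, such as the media converter case described earlier, at the cost of slightly more control traffic.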
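The mode is set with the channel-group command on the member interfaces. As a minimal sketch, with hypothetical switch names SwitchA and SwitchB and an assumed port range, an active/passive pairing looks like this:

```
! SwitchA starts the negotiation by sending LACPDUs
SwitchA(config)#interface range f0/23 - 24
SwitchA(config-if-range)#channel-group 1 mode active

! SwitchB stays quiet until it hears LACPDUs, then responds
SwitchB(config)#interface range f0/23 - 24
SwitchB(config-if-range)#channel-group 1 mode passive

! Resulting behaviour for each combination of modes:
!   active  + active   -> channel forms
!   active  + passive  -> channel forms
!   passive + passive  -> channel does not form
```

Setting both sides to active is a common choice because it removes the risk of ending up with the passive/passive combination.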

Each link in an LACP channel is assigned a link number. This is used when setting port-priorities, and depending on implementation, may match the hashing values that are used for traffic distribution.

Each port can have a priority assigned. The priority is combined with the port number to get the Port Identifier. This value is used when deciding on which ports need to be active and which need to be standby. If the values on both ends match, the System ID / System Priority is considered.

Each LACP device can be assigned a System Priority, which (on a Cisco switch) is globally configured. The system priority is combined with the MAC address of the switch to create the System ID. The system ID is used to break a tie if port priorities are the same on both endpoints (lower values have a higher preference).
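On a Cisco IOS switch, both priorities default to 32768 and can be lowered to make a switch or a port more preferred. The sketch below uses a hypothetical switch name and arbitrary priority values chosen only for illustration.

```
! Global setting: a lower value makes this switch more preferred
Switch(config)#lacp system-priority 100

! Per-port setting: a lower value makes this port more likely to be active
Switch(config)#interface f0/23
Switch(config-if)#lacp port-priority 100
Switch(config-if)#end

! Displays the system ID (system priority plus the switch MAC address)
Switch#show lacp sys-id
! Displays the local port priorities and port states
Switch#show lacp internal
```

Port priority only matters in practice when there are more member links than the platform can keep active, since that is when some links have to be placed in standby.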

Each channel has an Administrative Key. This is an LACP locally significant value assigned to each LAG. On a Cisco switch, this is the ID of the port-channel (port-channels are discussed further in the configuration section). This is used to make sure that the ports on the remote device are in the same channel. For example, if a channel on a local switch receives different keys from the remote switch, then the ports on the remote switch are not all in the same channel.

Static Etherchannels

A static etherchannel simply creates a channel without negotiating any parameters with the peer device. The upside to this is that the channel comes up instantly, as it doesn’t spend any time in negotiation. However, it does come with some significant downsides too.

The physical ports in a channel should all be configured the same way, in terms of link-speed, duplex, flow control, and so on. A dynamic channel will check that these values match before the channel comes up, and generate syslog messages if there is an error, to notify the admin. A static channel however, does not do this checking. This means that a simple misconfiguration on a port can cause problems for the whole channel.